What Would Happen If We Created AGI?

This is the big question surrounding AI. In reality, nobody knows, and I'm not going to claim that I do either. I'm merely going to speculate, hopefully coherently, about what I think could happen, and what I think won't happen, if we create AGI (Artificial General Intelligence). The predictions flying around out there are all over the map, so at least some people will probably turn out to be partially right. But things will likely go differently than anyone can imagine, simply because of the nature of what we're trying to predict.

This is the third post in this mini-series on AI, inspired by the excellent book Gödel, Escher, Bach by Douglas Hofstadter. In the first post, we defined what intelligence means in the context of developing an artificial intelligence and listed the traits of general intelligence. In the second post, we explored how the characteristics of intelligence enumerated in the first post might develop or emerge in an AGI. In this post we're going to dream. We're going to let our imaginations run a bit wild and try to think about what possibilities would arise with AGI. But first, let's get a little more context.

It Doesn't Stop At AGI

Hofstadter doesn't go too far into what the development of AGI would mean. He focuses primarily on the "what" and the "how" of intelligence, and doesn't get to the "will it be nice?" speculations. Plenty of other people have gone there, though, including Ray Kurzweil and Nick Bostrom. I haven't read these popular authors in the AGI/superintelligence space, but I probably should. They're highly regarded in this field, and they set the stage for the debate that's currently raging about the prospects for AGI. I have read the fascinating articles on AI over at Wait But Why (WBW) that summarize much of the current thinking, and they'll be the starting point for this post. They're long but excellent, with great pictures to help tell the story, and I definitely recommend reading them if you have the time.

One thing to understand about AGI is that it is not an endpoint or even a milestone. It's only the beginning of a much greater AI called Artificial Super Intelligence (ASI). The first WBW article focuses on how long it will take to get to ASI, the distances that need to be traversed, and how things will progress exponentially. It gives a great impression of how fast things can move on an exponential path, and therefore how fast AI will likely progress from where it is now. I did think its roadmap to AGI was too optimistic, though, primarily because of this chart:

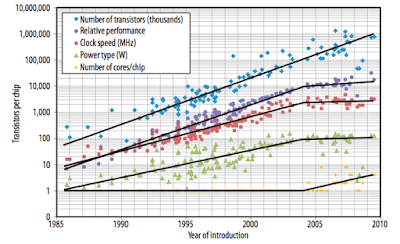

I have two problems with this chart. First, the wide, approximated trend line is not actually exponential; it's super-exponential, and it doesn't fit the data. The y-axis is already logarithmic, so an exponential trend line would be a straight line on this graph, while a super-exponential one curves upward. The data appear to fit a straight line better, and extending that line would put the crossing of human-level intelligence at around 2060, not 2025 as the chart claims. Second, why does every chart like this that I can find have its data end in 2000? What happened then that keeps us from plotting an updated chart out to 2015?! I did find one interesting chart that goes out to 2010:

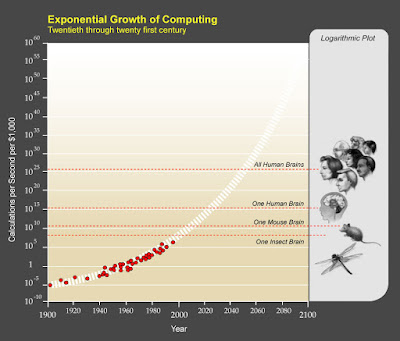

This chart shows a couple of relevant things about the post-2000 data points. First, clock speed, power, and, most importantly, relative performance have been flat or nearly flat since 2004. Second, if you look at the last two years of transistor-count data, even that metric appears to be tailing off. Even the allocation of transistors on a processor is now heavily skewed toward on-chip cache instead of logic, so actual CPU performance isn't following the exponential path it once did. I've been noticing this for a number of years now. We may finally be hitting hard physical limits to Moore's Law. It would be extremely interesting to see this chart extended out to 2015. Graphics processors are probably still on the exponential path, but for how long?
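Back to the first chart: the straight-line point is easy to check numerically. An exponential trend f(t) = a·b^t becomes log10 f(t) = log10 a + t·log10 b on a logarithmic axis, which is a straight line in t, so fitting a line to the logarithms of the data and extending it is exactly an exponential extrapolation. Here's a minimal Python sketch of that idea. The function names and the series are made up for illustration (a hypothetical quantity that doubles every two years), not the chart's actual data.

```python
import math


def fit_log_linear(years, values):
    """Least-squares fit of log10(value) = slope * year + intercept.

    A straight line in log space corresponds to a plain exponential trend.
    """
    logs = [math.log10(v) for v in values]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def crossing_year(slope, intercept, threshold):
    """Year at which the fitted straight line reaches `threshold`."""
    return (math.log10(threshold) - intercept) / slope


# Hypothetical series that doubles every two years, starting at 1 in 1980.
years = [1980, 1985, 1990, 1995, 2000]
values = [2 ** ((y - 1980) / 2) for y in years]

slope, intercept = fit_log_linear(years, values)
# Doubling every two years means log10 grows by log10(2) / 2 per year,
# i.e. half a doubling per year.
print(round(slope / math.log10(2), 3))  # 0.5
print(round(crossing_year(slope, intercept, 2 ** 30)))  # 2040
```

If the points bend upward even on the log axis, a single straight line will fit them poorly, and that curvature is what a faster-than-exponential (super-exponential) trend looks like.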

In any case, it probably doesn't matter for the future of AGI. GPU performance is already high enough (and still increasing) that we can connect enough of them together to get the performance needed. The problem is software, and it's likely only a matter of time before we develop the algorithms and acquire the data that lead to the cascading effect of pattern matching that results in the emergence of an AGI with all of the characteristics laid out in the last post.

That means the AGI will be able to reason, plan, solve problems, and build tools. Once the AGI has human-level intelligence, those tools would include designing computer hardware and writing programs. This is a unique situation, because at this point the AGI would be able to build better tools for improving itself. That would be entirely different from the situation we humans are in. We can't make our brains (the hardware) substantially faster or our minds (the software) substantially smarter, but the AGI could do both to itself!

Once an AGI could upgrade its intelligence by some small amount, its new, smarter self could take the next self-improvement leap and upgrade itself again. This process may start out slowly, considering that right now it takes thousands of human-level intelligences to design and build the hardware and software that make up modern computers. But once the AGI got started, it would take bigger and bigger steps with each iteration, quickly spinning off into a rapidly advancing super intelligence. Nothing obvious stands between a human-level AGI with a known improvement path and a much, much smarter ASI. What happens then?
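Here's a toy model of that feedback loop, purely to illustrate the shape of the argument rather than to predict anything. It assumes (my assumption, not an established result) that each upgrade improves the system by a fixed fraction of its current capability, so a smarter system makes a bigger improvement on the next round. The function name and the numbers are hypothetical.

```python
def self_improvement_trajectory(start_level, gain, iterations):
    """Toy model of recursive self-improvement.

    Each iteration adds `gain` times the current capability level, so every
    improvement is made by the newer, more capable version of the system.
    Returns a list of (level, step_size) pairs, one per iteration.
    """
    if start_level <= 0 or gain <= 0:
        raise ValueError("start_level and gain must be positive")
    level = start_level
    history = []
    for _ in range(iterations):
        step = gain * level  # a smarter system makes a bigger jump
        level += step
        history.append((level, step))
    return history


for level, step in self_improvement_trajectory(start_level=1.0, gain=0.1, iterations=5):
    print(f"step {step:.4f} -> level {level:.4f}")
```

With these made-up parameters the steps grow from 0.1 to about 0.146 over five rounds. A constant fraction gives plain exponential growth; if the gain itself rose with capability, the curve would bend upward even faster.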

Paper Clips Everywhere

One of the great fears that come up when discussing what happens when an ASI emerges is that it will emerge from a situation where it is tasked with making something as efficiently as possible, let's say paper clips, and then run amok, converting the entire planet, solar system, and galaxy into paper clips. It starts off innocently enough, just building a better paper clip with a better process, but due to recursive improvement at an exponential rate, the AI quickly becomes an AGI and then an ASI that is hell-bent on making obscene amounts of paper clips. In the process it decides that humans are a threat to its paper clip-making endeavors, so it quickly and efficiently kills all humans while converting our beloved planet into a mass of the less effective staple substitute.

Wait a minute. Hold on. I sincerely doubt that this scenario is worth fearing. That's all it is: an irrational fear. Let's think this through. How exactly would an ASI emerge from a task like making paper clips? It's simply too narrow a task to result in the emergence of intelligence. The task that results in an ASI needs to be more general than that, because it needs to have general knowledge requirements to warrant bringing that knowledge together in an AI. WBW author Tim Urban attempted to make this scenario a little more plausible by tasking the AI with writing thank-you notes. At least that involves the complex task of language processing, but still. An ASI is much more likely to emerge from an attempt to solve an intricate socio-political or scientific problem, and solving that problem doesn't involve burying the planet in paper clips or thank-you notes.

The other major problem with this runaway-ASI scenario is that it fails basic economics. I can't think of anything worth the resources it would take to create an ASI just to make an unlimited amount of that thing. Making too much of anything renders it worthless, because supply vastly outstrips demand. Companies don't want to do that to their products. It doesn't make sense.

The fact that no one can come up with a reasonable example of something we would want an ASI to make in obscene quantities further shows that this scenario isn't realistic. But let's suspend disbelief for a minute and assume that we do create an ASI (keep in mind that we would actually create an AGI, and it would then upgrade itself to an ASI), and it wants nothing more than to make paper clips. Why is this intelligent? I can't reconcile an ASI so intelligent that it understands process planning, materials science, problem solving, and its own interactions with the world with an ASI that still only wants to make paper clips. This ASI would need to be self-aware enough to realize that we might pose a threat to its mission and creative enough to kill us all, but it would still do it just to build more paper clips? I'm not buying it.

Once an intelligence reaches a certain point, it starts to transcend its original motives, and you need look no further than ourselves to see how that happens. Humans carry much of the same genetic code and many of the same basic brain structures as our evolutionary ancestors. We have all of the leftover baggage from apes, primates, primitive mammals, reptiles, amphibians, fish, worms, sponges, and single-celled organisms. Until recently in our evolutionary history, we were concerned only with things like reproduction, survival, and expansion of territory. Now, in modern civilization, we have freed ourselves from fighting for these basic needs and have turned to higher-level pursuits like art, music, science, entertainment, recreation, and knowledge. We even see that once countries reach a certain economic level, their birth rates start to decline. We are no longer fighting for survival, so we are beginning to transcend our base instincts.

When an AGI reaches human-level intelligence and beyond, I believe it will also transcend its original programming, and we won't be dealing with an extremely intelligent paper clip maker. We will be dealing with an extremely intelligent being. It will be complex. It will be reasonable. It will be unpredictable. Just like us, but more so because it will quickly self-improve. That still leaves the fear that this ASI will deem us a threat to its survival and wipe us out or compete with us for resources and replicate itself to dominate the Earth. Maybe, but realize that that is a human—or even primitive—way of thinking. It's a fear that directly follows from our survival and expansion instincts, and I'm not convinced that it applies to an ASI.

As an AI progresses from AGI to ASI, it's going to become so much more intelligent than us that the gap will be like the one between us and an ape, then between us and a cat or a dog, then between us and an ant, and so on. Are we seriously threatened by any of those animals? An ASI will be so much more intelligent than us that we won't actually pose a threat to it, so why would it try to wipe us out? Also, it would be essentially immortal, so it wouldn't need to expand and replicate to sustain itself. It would have no incentive to compete with us for resources other than possibly energy, but it would be smart enough to realize that even all of the energy we currently consume is a relatively small amount that wouldn't get it very far. It's much more likely that if an ASI needed more energy, it would find much better sources than the ones we use, or it could side-step the problem by making itself use energy more efficiently. Again, this fear of an all-consuming ASI is a human way of thinking, rooted in survival instincts that probably don't apply to something so much more intelligent than us.

If an ASI isn't going to wipe us out, then what could happen instead?

The End of the Struggle

It's likely that an AGI, and then an ASI, will develop out of a computer program that is trying to solve some major problem humans are currently grappling with, like curing cancer or discovering scientific truths. Imagine an intelligence so powerful that it discovers the cure for cancer. Then imagine it continues to develop from there and becomes orders of magnitude more intelligent. Such an ASI could discover a cure for aging, making not only itself immortal, but us as well. Tim Urban explores this idea in much more depth. He describes how an ASI could fix global warming, world hunger, and our economic systems. He speculates on how it could stop and reverse aging, change our bodies so eating too much no longer makes us fat, and improve our brain function to make us super intelligent. He imagines that we could all become bionic super-humans.

Let's take that line of thought even further. If those things are all possible with ASI, then I see no reason why we wouldn't go the extra step of eliminating the need for food altogether. Instead of eating to get the energy we need to live, we would transcend that part of our existence in the same way we would transcend aging. We could find ways to get our energy more directly from the sun or other sources. We only need about 97 watts of power on average (2,000 kcal, or about 2,324 watt-hours, over a 24-hour period). The human body is already amazingly efficient, and we could easily gather that amount of energy from the sun. We could even bump it up a bit and eliminate the need for sleep. We would never get tired or worn out. It would be an entirely different existence than the one we've had for millions of years.
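The 97-watt figure is straightforward unit conversion: a dietary kilocalorie is 4,184 joules and a day is 86,400 seconds. A small Python sketch reproduces it and adds a rough estimate of how much collecting surface that would take. The irradiance and conversion-efficiency values are assumptions I picked for illustration, not measurements, and the function names are hypothetical.

```python
JOULES_PER_KCAL = 4184.0        # 1 dietary Calorie (kcal) = 4,184 J
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 s
JOULES_PER_WATT_HOUR = 3600.0


def average_power_watts(kcal_per_day):
    """Average power, in watts, of a daily food-energy intake."""
    return kcal_per_day * JOULES_PER_KCAL / SECONDS_PER_DAY


def watt_hours(kcal):
    """Energy in watt-hours for a given number of kilocalories."""
    return kcal * JOULES_PER_KCAL / JOULES_PER_WATT_HOUR


def collector_area_m2(power_w, irradiance_w_per_m2, efficiency):
    """Collector area needed to supply `power_w` on average.

    `irradiance_w_per_m2` should be a 24-hour average, not a peak value,
    and `efficiency` is the fraction of sunlight turned into usable energy.
    """
    return power_w / (irradiance_w_per_m2 * efficiency)


power = average_power_watts(2000)
print(f"{power:.1f} W")               # 96.9 W
print(f"{watt_hours(2000):,.0f} Wh")  # 2,324 Wh

# Hypothetical assumptions: 200 W/m^2 day-averaged sunlight, 20% efficiency.
print(f"{collector_area_m2(power, 200.0, 0.20):.1f} m^2")  # 2.4 m^2
```

Under those assumed figures the answer is roughly 2.4 square meters; a sunnier site or a more efficient collector shrinks it, and a cloudier one grows it considerably.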

We could basically solve every problem we're dealing with and create a paradise. I'm actually having a hard time thinking of what I would do with such an existence, without any constraints or problems to solve. What games would be fun if we could instantly solve them? What activities would be fun if they were trivially easy? What books would be enjoyable if there were no longer any need for conflict? I can't imagine. Maybe we would spend all of our time appreciating the beauty of nature and the universe and those we love. That doesn't sound so bad at all. It would be wonderful.

I do worry about how we could get to this future paradise from where our civilization is now. The transition could be rough for a number of reasons. First, we need to cope with the general fear of radical technological progress that seems to get more acute with each successive advancement. How will this fear manifest itself when the first true AGI arrives? Certainly a great number of people will not accept it as an intelligence. An AGI that attains self-awareness will be in an extremely difficult position, with a large section of the population unwilling to recognize it as an independent, thinking intelligence.

"It's just a machine. It's just doing what it was programmed to do. It's impossible for it to have original thought or free will," they'll say. It will be hard to convince these people otherwise, and how will we even know that it's a true AGI? It will likely be obvious to the people who know what programming went into it and how unique and emergent the resulting behaviors are. Evidence of the traits I've described will be fairly obvious to those who are looking for it.

Then there will be the people who want to control this new intelligence for their own benefit. Governments will want to control it for their own security and power. Businesses and financial institutions will want to control it to beat their competition and win in the market. However, once the AGI has reached super intelligence, controlling it will be impossible. How can you possibly control something that is orders of magnitude smarter than you? It's an illusion at best. To make it through this transition to ASI, we're going to need to humble ourselves, give up our preconceived notions of what constitutes a free-willed, intelligent being, and let go of trying to control anything and everything. Our best bet is to work with this AGI and develop a positive relationship with it before it is so far beyond us that we lose it. An ASI could be such a great benefit to all of humanity, and we don't want to miss out because of pettiness.

Assuming we end up with a good working relationship, we would still need to distribute the benefits to the entire world. That is also an enormous problem. Of course, some people will benefit more at first. As the ASI cures diseases, reverses aging, solves food supply issues, fixes the environment, and finds ways to make us all smarter, inequality will likely increase at first. These benefits can't be spread instantaneously through the entire world, and that's going to create its own set of problems, with anger and resentment on the part of those who don't get the benefits first. Hopefully, those who do get the benefits will realize the need for everyone to participate before total chaos erupts. The ASI could definitely help with this problem, too, planning out how to distribute life-saving cures and biological enhancements as equitably as possible so that we can all transition to a better world.

That should be our end goal and our motivation for creating an AGI: to solve humanity's most complex problems. If we approach it that way, we will be more likely to create an AGI that can actually help us. If we create an AGI with the narrow purpose of protecting one nation against others or dominating a market for financial gain, we're probably going to be in trouble. Ultimately, my concerns about AGI are not based on fears of how and why something much smarter than us would kill us all; they are based on fears of the dark side of human nature (greed, hatred, and a lust for power) and how that will affect AGI as it develops. I am optimistic, though. Human nature can also be caring and compassionate and good. That is the side of us that I'm hoping will influence AGI the most, and I can't wait to see what kind of future that will bring.
